EP103: How The Spotify “Algorithm” Actually Works

"The Spotify Algorithm". "Game the algorithm". "See, here's how the algorithm works..." Let's be honest - when it comes to any algorithm (including Spotify's Recommendation Engine), most people don't know what the f*** they're talking about. Still, people line up to pontificate about the inner workings of "the algorithm"...

In this episode Circa and Corrin discuss the work of Spotify's machine learning engineers in creating the Spotify Recommendation Engine. You'll learn how it ACTUALLY works, including everything we know and the things we still can't know. You'll dispel countless mythical book chapters and tutorial videos floating out there on the internet, AND...

You'll finally know how the Spotify "Algorithm" works and what it means for YOUR music career. So, if everyone in your music scene can't stop themselves from morphing into armchair data scientists, you're going to want to listen closely to this one!

Episode 103:

Circa: It gets so much crazier than just what we've gone over. We don't have enough time in this podcast to go over all of the stuff, which is why there's going to be a lot in the resources section.

Corrin:

I don't have the brain capacity to go into all this in this podcast episode.

Circa:

It excites me so much.

Narrator:

You are now listening to the Creative Juice Podcast, brought to you by Indepreneur.io.

Circa:

What's up Indies? Welcome back to the Creative Juice Podcast. I am your host, Circa. With me as always is my co-host, Corrin.

Corrin:

Yo, yo, yo. What's up?

Circa:

What's good with it?

Corrin:

I'm good. I'm just in a good mood today, I guess. I'm dancing in my chair a little.

Circa:

Yeah, me too. We finally got some carpets in here at Orlando HQ to cut down on the bounce in here.

Corrin:

We're fancy, yeah.

Circa:

So yeah, things are finally coming together. The room's starting to look put together behind me in the video. I know that you guys have your own challenges with Nashville, but we're coming to the finish line over here.

Corrin:

Yeah, I was telling the Indies on Live yesterday, "It looks okay right here. It's not very decorated, but it looks good. And then beyond the camera is just, things are everywhere, so we're still getting it figured out." We have all our books on our bookshelf, so that's kind of an easy part.

Circa:

That's exciting.

Corrin:

Yeah! But hey, they're not on the floor, so it's great. Win!

Circa:

Right, that's the best, yeah. Well, today we have another nerdy kind of episode, but I think it's going to be really cool because we had another nerdy episode recently where we talked about death of websites, which is a very nerdy topic. This topic is equally nerdy and difficult to understand, but it has the added benefit of being something musicians talk about all the time.

Circa:

Just like marketers were talking about, "How does Google's search rating algorithm work? I've got the hack." That's what's going on with Spotify right now for musicians. Everyone's positing how the recommendation engine works, everyone's heard this tidbit or that from a Spotify business side representative. And it seems like there's no one who is actually like, "No, this is nuts and bolts how it actually works."

Circa:

The only people who are really suited to do that are the people who developed the recommendation engine at Spotify. So what we did was a deep dive on conference presentations from Spotify's development team. I went to LinkedIn and found a little thread that I could pull, like a trail of breadcrumbs, by finding the first engineer I could who was representative of the machine learning side of Spotify's development team. Then I found their coworkers, I found relevant titles at Spotify for people who are in that section of the business, building their recommendation engine, and then I just cross-referenced all of their names and titles with YouTube, with Facebook Video, with Vimeo, and found all of these presentations that they've given to other propeller heads like themselves about their work at Spotify.

Circa:

So all the information was contained there. Everything we're about to talk about in this episode is literally just poring over those developer conferences and listening to what the developers actually have to say about what they built.

Corrin:

Yeah. That's really cool too because I think there's a lot of speculation, and the people who are saying different things about how Spotify works are probably taking information from their experience, right? I don't think anybody's making anything up, but I do think that they're potentially taking what they've experienced and kind of interpreting that data, which is probably very, very limited compared to the actual data that's out there, and how things work. So obviously if there are people who have built this, there's no one who knows it better than them.

Corrin:

So I'm excited. I mean, I'm going to be learning along with the rest of you guys quite a bit because Circ definitely dove deeper into this than I ever have. But I'm excited to hear all the things.

Circa:

Hell yeah. First, before we talk about this, it's important to sort of learn how machine learning works a little bit. Machine learning works by a process of training a machine learning algorithm on a data set. So basically, you give the machine learning algorithm this data set. For instance, all the videos that are on YouTube and how many times they've been watched by which users, that might be a gigantic data set.

Circa:

You set the machine learning algorithm loose on that data, and you tell it, basically by manner of programming, you tell it to find videos that certain users would like based on this data set, right? That they haven't actually watched yet. So you can train it on this, and then get positive hits, and then test it, and see, is the machine learning algo right?

Corrin:

Right.

Circa:

So you can give the machine learning algo positive and negative feedback, and it will just course correct itself. This type of computing is called neural networks, and basically they work with a solution, they train with a solution to find the problem, sort of, if that makes sense.

Corrin:

Yeah. That's crazy, too, because if you think about Spotify watching you, right, in a not creepy way, but the way that you engage with various songs, not just how many streams you get. I feel like when I hear people talking about various pieces of this, they're like, "Yeah, how many streams something is getting, or how many saves something is getting, or how many adds to a playlist something is getting," and so those are the hacks that are out there.

Corrin:

In reality, it's looking at consumption and behavior, right?

Circa:

Yeah.

Corrin:

And things that are so much more complicated than simply the number ... not that those numbers are irrelevant, but they're just very, very top layer of things that are being evaluated by this machine learning. You know?

Circa:

Yeah. And that's just like a 30,000 foot view of machine learning. There's so much to talk about with neural networks. I'm going to put in the resources here some great YouTube series to follow. I'm not a mathematician, the rest of my family is, but I never got into it, and I can't do the math on this, but I've watched this YouTube series where mathematicians basically break down how neural networks work. It's really freaking interesting. It's sort of like there's a YouTube series where they start off with like, "This is how two dimensions works, then this is how 3D works, now 4D and 5D." They take you into the 11th dimension, piece by piece.

Corrin:

Oh my gosh.

Circa:

So you could know nothing about complex extra dimensional math, but you kind of get there conceptually because they walk you through it, and they do the same thing with neural networks. So I'll put it in the show notes, but basically, let's bring it down to the ground level. Here's an example of machine learning, what I just described, where you give the machine a problem to train on, and then set it loose in real life.

Circa:

The way that they train the Discover Weekly recommendation engine, the model they use to populate your Discover Weekly, I'm not 100% sure that this is the only model they use, but it's one of them, is they will take a random sampling of playlists on Spotify, actual playlists, like user generated popular playlists, and they will, let's say it's 30 tracks in length, they'll take out one track, and then they'll train the machine learning algorithm where the only positive feedback is if it guesses the exact track that they removed.

Circa:

So they say, "Okay, try a million different things, try to get the right track," and it can go through literally millions of guesses in a very short timeframe because it's a computer.

Corrin:

Right.

Circa:

Only once it starts guessing every ... Okay, let's say you train on one playlist until it guesses that track, and then you take the settings that you used to derive that answer, let's go to another playlist. Can you find the missing track in this one? Once they've trained this algo to a point where it can just reliably guess the missing track in any playlist, then they take your user history, and they tell it to guess the missing track.
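
A minimal sketch of that hold-one-out setup, assuming a toy catalog and a deliberately simple guesser that picks the track co-occurring most often with what is left in the playlist. The function names (`hold_one_out`, `guess_missing`, `hit_rate`) and the data are hypothetical, and the guesser stands in for whatever model is actually trained.

```python
import random
from collections import Counter
from itertools import combinations

def hold_one_out(playlist, rng):
    """Hide one random track; return (remaining tracks, hidden track)."""
    hidden = rng.choice(playlist)
    remaining = [t for t in playlist if t != hidden]
    return remaining, hidden

def build_cooccurrence(playlists):
    """Count how often each ordered pair of tracks shares a playlist."""
    counts = Counter()
    for pl in playlists:
        for a, b in combinations(sorted(set(pl)), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts

def guess_missing(remaining, counts, catalog):
    """Guess the unseen track that co-occurs most with the remaining ones."""
    candidates = [t for t in catalog if t not in remaining]
    return max(candidates, key=lambda t: sum(counts[(t, r)] for r in remaining))

def hit_rate(playlists, counts, catalog, seed=0):
    """Fraction of playlists where the guess is exactly the hidden track."""
    rng = random.Random(seed)
    hits = 0
    for pl in playlists:
        remaining, hidden = hold_one_out(pl, rng)
        hits += guess_missing(remaining, counts, catalog) == hidden
    return hits / len(playlists)

# Toy data (hypothetical track IDs).
training = [["a", "b", "c"], ["a", "b", "c", "d"],
            ["x", "y", "z"], ["x", "y", "z", "w"]]
catalog = sorted({t for pl in training for t in pl})
counts = build_cooccurrence(training)
```

The only "positive feedback" here is an exact match on the hidden track, which mirrors the description; a real evaluation would score playlists the model has not already seen.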

Corrin:

Ahhh. That's insane, though. It's almost ... because my understanding of this algorithm, like I understand algorithms, and I nerd out on some machine learning stuff, but I've never done it, right? I'm not a developer.

Circa:

Right.

Corrin:

So I'm always impressed with this stuff, because it's the things that we go to college for, right? We have people who go and get their PhD in Psychology, and they're evaluating behavior, right? And evaluating things, and trying to identify what caused such problem, right? So they're almost solving social equations, and you have to go to college for years, and years, and years, and years and study tons of different people before you have kind of like an instinct or even the logic to figure this stuff out.

Circa:

It's nuts.

Corrin:

Yeah, it's crazy that ... Yeah, it's such a cool world we live in, which is why, when Circ says that soon creativity will be one of the only things automated, this is a perfect ... or the only thing that isn't automatable, right? This is one of those things that really illustrates exactly what that is.

Circa:

Yeah, and to a degree, the Spotify recommendation engine is making decisions that we would typically foist onto the realm of "creativity".

Circa:

Putting together a playlist that people are going to enjoy, that follows a narrative in terms of energy is something that we would consider a creative task, like a DJ does it.

Corrin:

True. Well, I mean, that's crazy that the machine's probably doing it better than a curator. You know what I mean?

Circa:

Right! That's so crazy!

Corrin:

So I have a question. Does the algorithm do this based solely on user activity across its hundreds of thousands or millions of users, however it is?

Circa:

No.

Corrin:

Or does it analyze beats?

Circa:

No, no.

Corrin:

What all is up in this?

Circa:

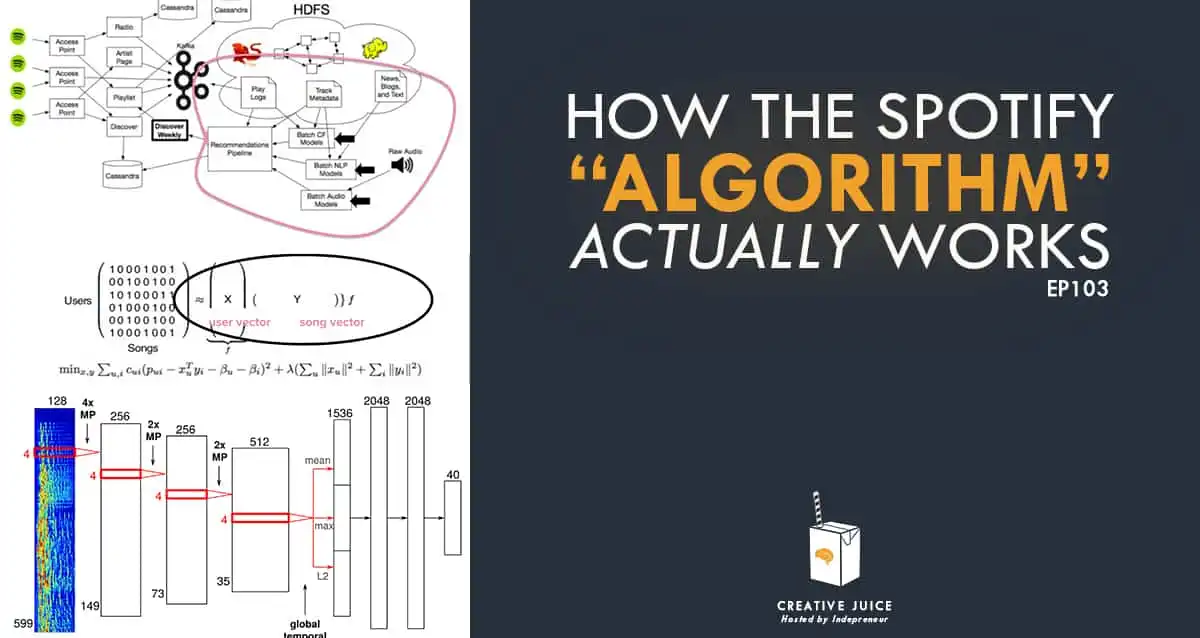

Yeah, perfect question to get into it. Okay, so when you go and visit Spotify, let's say you're on a desktop or mobile, you go to visit Spotify, and your home page populates. Now, your homepage has its own recommendation engine behind it, which is based off of a lot of the math we're going to go into in a second. But let's say you go into an algorithmic playlist, or just your homepage, anywhere where the algorithm is invoked, and it's really a recommendation engine. I've been trying to train myself off of calling it an algorithm, because it's actually like many, many algorithms.

Circa:

It's a recommendation engine. So let's say you visit your Discover Weekly, for instance. Your Discover Weekly is fed by what is called the recommendations pipeline, which is like a storage house for the mathematical calculations of the recommendation models. So recommendation models discover, for this user ID, based on what we know right now, what are the right songs.

Corrin:

Whoa.

Circa:

And it feeds those recommendations into a pipeline, which then gets distributed out to you, and most of these things are updated daily. Now, there's three recommendation models behind the pipeline, and the primary one is what they end up calling ... I mean, there's many different ways to describe it, but I like to call it the latent vector space-

Corrin:

Oh my gosh.

Circa:

... it is what they call it, and it seemed like the easiest way to understand it. Okay, basically every song ID, every user ID, every artist ID exists in a two or three dimensional, depending on how you calculate it, space, like a physical space. And proximity to each other equals likelihood of similarity.

Corrin:

Right.

Circa:

Right? So if my user ID is close to this song ID in the latent vector space, then it is likely that I'll enjoy the song if I've never heard of it.
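
To make "proximity equals likelihood of similarity" concrete, here is a small sketch that ranks unheard songs by distance from a user. The coordinates are made up and two-dimensional purely so they are easy to read, and plain Euclidean distance is an assumption; the talks may use a different similarity measure.

```python
import math

# Hypothetical coordinates in a toy 2-D space.
song_vectors = {
    "song_a": (0.9, 0.1),
    "song_b": (0.2, 0.8),
    "song_c": (0.8, 0.3),
}
user_vector = (1.0, 0.2)

def nearest_songs(user_vec, song_vecs, already_heard, k=2):
    """Return the k unheard songs closest to the user in the space."""
    unheard = {s: v for s, v in song_vecs.items() if s not in already_heard}
    ranked = sorted(unheard, key=lambda s: math.dist(user_vec, unheard[s]))
    return ranked[:k]

# The user has already heard song_a, so the closest unheard song is suggested.
suggestions = nearest_songs(user_vector, song_vectors, {"song_a"}, k=1)
```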

Corrin:

Right.

Circa:

The way that they calculate that is they use matrix math, which is really intense math. I can point you to a mini course from Khan Academy that I took to try to just wrap my head around what was going on.

Corrin:

Oh my gosh.

Circa:

And it's great. It'll walk you through it pretty well, but you'll definitely go cross-eyed a few times. But ultimately, in mathematics, a matrix is like a set of brackets, and in those brackets is rows and columns of numbers. Right? So you might think of it like a grid of numbers.

Corrin:

Yeah, like The Matrix dropdown screen, right?

Circa:

Yeah.

Corrin:

Those are all numbers in rows and columns.

Circa:

Exactly, right. So let's say on that screen there was 5,000 rows and 3,000 columns or whatever of numbers. That would be a gigantic matrix. Now, Spotify's matrix that they start out with is every user ID, and every song ID in Spotify, which is ... User IDs are rows, so that's 217 million rows-

Corrin:

Oh my gosh.

Circa:

... and it's estimated somewhere north of 60 million columns. Right? So let's say I go to Corrin's User ID in the matrix, and then I go to one of her songs, right, in the columns. At that intersection of her row and the song's column, there should be some data there to tell me, "She's streamed this song." Right?

Corrin:

Okay.

Circa:

Yes, that's a positive.

Corrin:

Right.

Circa:

But what I don't know, what I assume, I don't know this for sure, but I believe that they have a way of saying that you've listened to a song more than once, so it's not just zeros and ones, it's not just binary, but I don't know that for sure because they represent it as binary.
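
A sketch of that users-by-songs grid, built from a hypothetical stream log. The `binary` flag is an illustration of the open question above: with `binary=True` each cell is 1 if the user ever streamed the song, otherwise 0; with `binary=False` the cell holds a play count instead.

```python
from collections import Counter

def build_matrix(streams, binary=True):
    """Build a users x songs grid from (user_id, song_id) stream events."""
    users = sorted({u for u, _ in streams})
    songs = sorted({s for _, s in streams})
    plays = Counter(streams)  # (user, song) -> number of streams
    matrix = []
    for u in users:
        row = []
        for s in songs:
            n = plays[(u, s)]
            row.append((1 if n else 0) if binary else n)
        matrix.append(row)
    return users, songs, matrix

# Hypothetical log: circa streamed song_2 twice.
streams = [("corrin", "song_1"), ("corrin", "song_2"),
           ("circa", "song_2"), ("circa", "song_2")]
users, songs, binary_matrix = build_matrix(streams)
_, _, count_matrix = build_matrix(streams, binary=False)
```

At the scale mentioned in the conversation almost every cell would be zero, so a real system would presumably store only the non-zero entries rather than a dense grid like this one.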

Corrin:

Right.

Circa:

Okay, so then they use a process, a calculation called matrix factorization. I have it on the screen right here. Oh yeah, so they have different variables here. It's one if a user has streamed a track, otherwise it's zero. Yeah, so anyways, they run this big calculation, and it basically spits out two numbers. One is the vector, the latent factor vector for the user, and the latent factor vector for an item, which is a song.

Corrin:

Right.

Circa:

Basically meaning it spits out coordinates for where these things should go in latent vector space. It takes all the data about who has streamed all these tracks? What other tracks have those users streamed? And they run this complex matrix factorization to arrive at a set of coordinates for where songs and users should sit in latent vector space. They remake the vector space, I think every day.
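
Here is a toy version of matrix factorization on a tiny 1/0 streaming grid. It learns one short vector per user and one per song so that their dot product approximates the grid. Plain stochastic gradient descent is used only because it is short to write; the conversation does not say which solver is used, and the names and hyperparameters (`n_factors`, `lr`, `reg`) are assumptions.

```python
import random

def factorize(matrix, n_factors=2, steps=500, lr=0.05, reg=0.01, seed=0):
    """Learn user and song vectors whose dot products approximate matrix."""
    rng = random.Random(seed)
    n_users, n_items = len(matrix), len(matrix[0])
    U = [[rng.uniform(0, 0.5) for _ in range(n_factors)] for _ in range(n_users)]
    V = [[rng.uniform(0, 0.5) for _ in range(n_factors)] for _ in range(n_items)]
    for _ in range(steps):
        for u in range(n_users):
            for i in range(n_items):
                pred = sum(U[u][f] * V[i][f] for f in range(n_factors))
                err = matrix[u][i] - pred
                for f in range(n_factors):
                    uf, vf = U[u][f], V[i][f]
                    # Gradient step with a small L2 penalty on both vectors.
                    U[u][f] += lr * (err * vf - reg * uf)
                    V[i][f] += lr * (err * uf - reg * vf)
    return U, V

def squared_error(matrix, U, V):
    """Total squared gap between the grid and the reconstructed grid."""
    total = 0.0
    for u, row in enumerate(matrix):
        for i, value in enumerate(row):
            pred = sum(a * b for a, b in zip(U[u], V[i]))
            total += (value - pred) ** 2
    return total

# Two groups of listeners with disjoint tastes (hypothetical data).
grid = [[1, 1, 0, 0],
        [1, 1, 0, 0],
        [0, 0, 1, 1],
        [0, 0, 1, 1]]
user_vectors, song_vectors = factorize(grid)
```

The rows of `user_vectors` and `song_vectors` are the "coordinates" in the sense used above: users and songs that behave alike in the grid end up with similar vectors.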

Corrin:

Oh my gosh. Okay, okay, okay. Wait a second. I'm like, hold on, because Circ is going to go down the rabbit hole here. Can you talk about this like, okay I'm in a room, can you explain this in some way in the common physical world? I know we're taking something that's kind of like ... Like if anyone's ever used Music Map, that's the most simplistic version of this that we could be talking about.

Circa:

It's sort of like it, but not really.

Corrin:

Right, yeah, exactly. Can you make this something in the tangible world? Is there a way that you can kind of illustrate?

Circa:

Totally, totally. Let's say that your row in the matrix and my row in the matrix are almost identical, right?

Corrin:

Okay.

Circa:

Except for one song that I've streamed and you haven't.

Corrin:

Okay.

Circa:

That means that the one song that I streamed and you haven't is so close to you in the latent vector space that it is like the primary candidate to get recommended to you in some way. Does that make sense?

Corrin:

Yeah, yeah, yeah. So depending on how similar users look in the tracks that they're listening to and what the algorithm has said that these various users have listened to, they're almost like grouping people together by the things that they've liked, and comparing the rows, or the columns of the things that they've listened to, and figuring out, "Okay, where's the hole?" Like they have all these ones that match, and here's the ones that only this person has listened to, and here's the one only this person has listened to, so now I'm going to basically switch them over kind of, so that I'm suggesting these other songs.

Circa:

Right.

Corrin:

Yeah, I got it.

Circa:

If it were monofactorial, if it was just like, "Okay, find me all the users who are almost identical, but except for one song."

Corrin:

Right, right.

Circa:

And then let's recommend those songs to people. That might be a very simplified version of what the recommendation engine would do.
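
That simplified, single-factor version can be sketched directly: find users whose streaming history differs from yours by at most one song, and recommend whatever they have that you do not. The user names, song IDs, and the `max_diff` parameter are hypothetical.

```python
def recommend_from_twins(history, target, max_diff=1):
    """Recommend songs from users whose history is nearly identical to target's."""
    mine = history[target]
    recs = set()
    for other, theirs in history.items():
        if other == target:
            continue
        # Symmetric difference: songs only one of the two users has streamed.
        if len(mine ^ theirs) <= max_diff:
            recs |= theirs - mine
    return sorted(recs)

history = {
    "circa": {"s1", "s2", "s3", "s4"},
    "corrin": {"s1", "s2", "s3"},
    "someone_else": {"s9"},
}
for_corrin = recommend_from_twins(history, "corrin")
```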

Corrin:

Very, very simple.

Circa:

But then you add in find me all the songs that have been streamed by almost the exact same users, except for one, and then recommend it down the chain. Okay, now you're adding in another factor. So the matrix factorization allows you to deal with three or four different factors, and arrive at an actual physical space, basically.

Corrin:

Right.

Circa:

And it's not ... So when they, because they do, in these developer conferences, they will pull up a graphic version of the latent vector space, but they're doing it so you can understand better, because that's not where they operate. Right?

Corrin:

Right.

Circa:

They're not like flying through the latent vector space, sniping out nearby neighbors to your user ID. That's not how it works.

Corrin:

Yeah, Star Trek galaxy stuff.

Circa:

It's not like a video game.

Corrin:

Yeah, exactly.

Circa:

It is just a visualization.

Corrin:

Right.

Circa:

But it's a great way to understand what's going on. They show you different renderings of the vector space, so they show you some renderings where color represents genre groupings. So as it gets closer to this hue, you're getting more into the hip hop, and then the deepness, the actual lightness of the color represents streaming frequency. So you can look at a person's ... I can look at your personal vector space, and just look at song IDs and artist IDs, and where they all fit.

Corrin:

Right, right.

Circa:

And look at your streaming data, and it can show me depth. So there's lots of different things that they can do. They can even, there was one developer who was talking about, "Once we run this matrix factorization, I can go to it and say Nirvana's Smells Like Teen Spirit plus a song by Eric Clapton, minus Nirvana's Unplugged version of Smells Like Teen Spirit, what does that equal, and the algorithm will spit out the Unplugged version of the Eric Clapton song."
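
A sketch of that kind of vector arithmetic on made-up 2-D coordinates, written in the usual analogy order (unplugged version minus original, plus the other song). The vectors are invented so that one axis loosely means "artist" and the other "unplugged-ness"; nothing here reflects real Spotify data.

```python
import math

# Hypothetical coordinates: x separates the artists, y separates plugged/unplugged.
vectors = {
    "nirvana_original": (1.0, 0.0),
    "nirvana_unplugged": (1.0, 1.0),
    "clapton_original": (3.0, 0.0),
    "clapton_unplugged": (3.0, 1.0),
    "unrelated_track": (0.0, 5.0),
}

def analogy(a, b, c, vecs):
    """Return the item nearest to (b - a + c), ignoring the three inputs."""
    target = tuple(vb - va + vc for va, vb, vc in zip(vecs[a], vecs[b], vecs[c]))
    candidates = [name for name in vecs if name not in (a, b, c)]
    return min(candidates, key=lambda name: math.dist(vecs[name], target))

result = analogy("nirvana_original", "nirvana_unplugged", "clapton_original", vectors)
```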

Corrin:

That's crazy.

Circa:

No bullshit.

Corrin:

Yeah, yeah, yeah. That's nuts.

Circa:

Yeah, so they can run simple math operations on it.

Corrin:

Well, especially considering-

Circa:

That's the main way.

Corrin:

... those aren't artists that would be considered similar. And this is why, I've always wondered this-

Circa:

No.

Corrin:

... because on Spotify, I don't listen to Discover Weekly very often, and I like the very particular things that I like because I listen to most genres of music. I sway more towards rock music, some of the really ... like Max Martin, Dr. Luke kind of stuff, and electronica, like Bassnectar, and Zedd, less pop-y Zedd, like deep cut Zedd. So I always wonder when I do go over to Discover Weekly, I'm always like, "How is Spotify figuring out that super weird mix, and mixing them up in Discover? I'm not just getting rock music."

Corrin:

Then they've got those like Daily Mixes, like one through four, and as I view ... because I used to be solely an Apple Music kid, partially because they pay artists better, and I have all Apple everything, Apple Watch, iPhone, all that stuff. So when I moved over to Spotify and started listening more, the more I listened, the better it got about things to recommend to me. But even in these various genres, and I think that's something that we don't think about as artists, is that if you're a rock musician and you have fans, it doesn't mean they're only rock musicians, rock fans. You know what I mean? There's a lot of other things that they listen to.

Corrin:

So of course, Spotify would have to be able to figure out, "Okay, here is this user, and they listen to this purple stuff and this green stuff, even though those things are nowhere close to each other." You know what I mean?

Circa:

Yeah.

Corrin:

It's crazy.

Circa:

It's very nuts, and it gets so much crazier than just what we've gone over. We don't have enough time in this podcast to go over all of this stuff, which is why there's going to be a lot in the resources section.

Corrin:

I don't have the brain capacity to go into all this in this podcast episode.

Circa:

It excites me so much. But you can go to Indepreneur.io/episode103 to get all the show notes, and just do your own deep dive.

Corrin:

Dude, this is going to be the podcast episode that has like 25 resources. Usually our podcast pages have like two or three, and Circ's going to be like, "Here's the top three dozen resources you should go check out."

Circa:

Yeah. For real. But yeah, so that's the first model, and that's the primary model. That's the model that they use, for instance, to train Discover Weekly.

Corrin:

Right.

Circa:

The data set that the Discover Weekly recommendation engine trains on is this latent vector space, is what they call it. It's where all the song IDs and user IDs live based on the data that it has from the matrix. So the matrix is just like the streaming record of every user, every song. It uses that to spit out this proximity, like this relationship based categorization for every song and user ID. And that's just so fucking nuts anyway.

Circa:

So you move on from there, that's the main model that feeds the recommendations pipeline. The second model, which my jaw dropped when I first found it out, and obviously we here at Indepreneur don't like to hear these things, but dogma is expensive, so we try not to be dogmatic. But ultimately, the second model of Spotify's recommendation engine is based almost entirely on articles on the internet, like blog PR, and playlists on Spotify, like playlist campaigns.

Circa:

So Spotify realized, "Okay, well we don't have a lot of data to tag up all these songs, and find out more about these songs and these artists, so we need to start using other data sets, we need to incorporate them into our data set." So they trained a model to read from relevant music outlets, places where music is described, which includes like music journalism, but it also includes music metadata out there on the internet. For instance, on Last.fm, which feeds Spotify in a big way.

Corrin:

Right.

Circa:

There's just a lot of data out there that they could pull from to jumpstart or bootstrap their latent vector space.

Corrin:

Right, right.

Circa:

So every other model that Spotify has feeds back into the latent vector space, in several ways. Basically what they do is they run natural language processing, which is the same technology that we use to develop automated artificial intelligence chatbots, or to develop artificial intelligence driven journalism, or translation algorithms and things like that. So natural language processing, NLP, and they basically break down the text. Whenever your song or your artist name is instantiated on the internet somewhere that they're checking, they read the text and look at the proximity of words, the other terms, to your artist name.

Circa:

So a term like exciting, or bluesy, or Jack White, these are all terms that might be descriptive of your music, and it scours for relationships between your song name or your artist name and these terms, and then it attaches that data set to your artist ID or to your song ID in the latent vector space and uses similarity between your song and other songs in terms of the NLP data to make more recommendations.
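
A very rough sketch of "look at the words near the artist name": count the terms that appear within a few words of each mention. The artist name, the sample text, the window size, and the stopword list are all hypothetical, and real NLP pipelines are far more involved than this.

```python
import re
from collections import Counter

STOPWORDS = {"the", "a", "is", "from", "of", "will"}

def nearby_terms(text, artist, window=4):
    """Count non-stopword terms within `window` words of each artist mention."""
    tokens = re.findall(r"[a-z']+", text.lower())
    name = artist.lower().split()
    n = len(name)
    counts = Counter()
    for i in range(len(tokens) - n + 1):
        if tokens[i:i + n] != name:
            continue
        lo = max(0, i - window)
        hi = min(len(tokens), i + n + window)
        for j in range(lo, hi):
            if i <= j < i + n:
                continue  # skip the artist name itself
            if tokens[j] not in STOPWORDS:
                counts[tokens[j]] += 1
    return counts

article = ("The new single from Example Band is a bluesy, exciting record. "
           "Fans of Jack White will love Example Band.")
terms = nearby_terms(article, "Example Band")
```

Terms like `bluesy`, `exciting`, `jack`, and `white` end up attached to the artist, which is the kind of association described above.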

Circa:

Essentially, you might think you're already existing somewhere in the latent vector space, so you're already there, your song ID is already there, but they don't know enough about it, so maybe they're a little bit fuzzy on where, specifically, it should be. This data strengthens that signal, and allows them to nudge it closer to its best location in the vector space. So ultimately, if you're out there, if you get 10 blog articles in places where they're checking, where they talk about how you're like the Rolling Stones, your dot in the vector space moves closer to the Rolling Stones, as far as I understand.
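
As a picture of what "your dot moves closer" could mean, here is a sketch that moves a point a fraction of the way toward another point each time a new mention comes in. The update rule, the `rate` value, and the coordinates are assumptions made purely for illustration; the talks do not spell out how the signal is actually applied.

```python
import math

def nudge(point, target, rate=0.1):
    """Move `point` a fraction `rate` of the way toward `target`."""
    return tuple(p + rate * (t - p) for p, t in zip(point, target))

# Hypothetical positions in a toy 2-D space.
your_song = (0.0, 0.0)
rolling_stones = (1.0, 1.0)

position = your_song
for _ in range(10):  # ten articles comparing you to the Rolling Stones
    position = nudge(position, rolling_stones)

before = math.dist(your_song, rolling_stones)
after = math.dist(position, rolling_stones)
```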

Corrin:

Right. That's crazy. Okay, so the other thing, too, we always have been like, "Oh, blogs are dead now, you know what I mean?" And it's funny that now this blog placement, or mentions online or whatever have kind of shifted back into a level of importance for an entirely different reason than it used to be important, right? I think that's crazy, and I also think that it's just like whether ...

Corrin:

Okay, okay, so let's say that you hire some company, some PR company to get you placed on a bunch of blogs, so this machine is going to be able to figure out like, "Okay, what are the types of artists that are being published on this blog?" Right? It probably has the capacity to be like-

Circa:

Yeah, totally.

Corrin:

... "This is a more legit blog. This is a blog that has no relationship between these artists, whereas this section of the Billboard blog is like the rock section." You know what I mean?

Circa:

Well you also might imagine that it's training to make recommendations, those recommendations get positive and negative feedback, which tells it information about whether it was wrong or right.

Corrin:

Right.

Circa:

So any element of this might learn over time, and that's going to become important later.

Corrin:

Yeah.

Circa:

Yeah, so it's dumb, it just reads the text, the webpages, if it's a text document, but over time it might start to ignore things like links out to other articles that have the artist's name, because that's not relevant. You know what I mean?

Corrin:

Yeah. Yeah, absolutely. It's almost like it's going to put more pressure ... Blog placement has now come back into a place where it could be relevant, but at the same time, it puts more pressure on only getting your stuff fed to blogs that you are of a little bit more-

Circa:

Are relevant.

Corrin:

... yeah, that are more relevant, more significant because-

Circa:

Not only that, but it's like metadata is equally as important.

Corrin:

Right.

Circa:

You know?

Corrin:

Right. Yeah, absolutely.

Circa:

Any text document that contains your artist name or your song matters, it could matter. It's so weird.

Corrin:

Yeah, totally. That's so crazy, man.

Circa:

It is nuts. Okay, so they built this model to scour the internet and steal metadata from other data sets. Great. Then they were like, "Well, we've actually got a shitload of text documents that have this data in it that we own," which is their 2.2 billion playlists, I believe is the number.

Corrin:

Right, right.

Circa:

So if they treat these playlists like text documents, which is artist and song names, and a description, and a name, they can train their NLP models on that to find associations between artists and songs. So they also do that, but they only do it for playlists that they consider "good". I've heard multiple developers say, "We do this with good playlists."
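
Treating a playlist as a small text document can be sketched like this: take the words in its name and description and associate them with every artist on it, skipping playlists that fail a quality check. The `followers` threshold used for "good" is a placeholder assumption, since the developers' actual definition is not given, and the playlists are invented.

```python
import re
from collections import Counter

def is_good(playlist, min_followers=100):
    """Placeholder quality check; the real criteria are unknown."""
    return playlist["followers"] >= min_followers

def artist_terms(playlists, keep=is_good):
    """Count (artist, term) pairs across the playlists that pass `keep`."""
    counts = Counter()
    for pl in playlists:
        if not keep(pl):
            continue
        text = (pl["name"] + " " + pl["description"]).lower()
        terms = set(re.findall(r"[a-z']+", text))
        for artist in set(pl["artists"]):
            for term in terms:
                counts[(artist, term)] += 1
    return counts

playlists = [
    {"name": "Warped Tour rock", "description": "loud rock bands",
     "artists": ["Artist A", "Artist B"], "followers": 3000},
    {"name": "chill study beats", "description": "",
     "artists": ["Artist A"], "followers": 5},
    {"name": "Rock workout", "description": "",
     "artists": ["Artist A"], "followers": 1200},
]
associations = artist_terms(playlists)
```

In this toy data the low-follower playlist is ignored, so "Artist A" picks up `rock` twice and never picks up `chill`.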

Corrin:

So like ones that you pay to be placed on, like that people don't engage with.

Circa:

Not necessarily, though.

Corrin:

Well no, no, no, no. I don't-

Circa:

There are plenty that you don't.

Corrin:

Yeah, yeah, oh I totally get that. But the ones that you pay to be placed on but don't actually make sense, or don't have user engagement, it's parsing that out. You know?

Circa:

It's not even helping you.

Corrin:

Right.

Circa:

Yeah, because ultimately, there's two things that go into you getting recommended: how proximal are you, in the latent vector space, to things that the people who will actually like your music also like, and how popular is your music? Those two things alone. Because let's say you're really close to the Rolling Stones, but Rolling Stones' fans hate your music, that's a disaster for you.

Corrin:

Right.

Circa:

Even if you're really popular, your shit will go down.

Corrin:

Yeah.

Circa:

So accuracy is what matters.

Corrin:

That's crazy, because I know that as much as I don't pay attention in Spotify, there were two instances where my songs ended up on Discover Weekly for a good period of time, and one of them was triggered when I played Warped Tour '16, and I got put on not even the Warped Tour playlist, because Warped Tour, yeah, it's known for its alternative rock and even like screamo metal type stuff, but there's lots of artists on Warped Tour, especially on the discovery stages that are not typical rock artists. Katy Perry did it back in 2008 or 2007, whatever it was.

Circa:

Right.

Corrin:

So there were a lot of different artists on that, but there was a user playlist that put together Warped Tour '16, but he only put on like the rock artists that he liked, and he put on even the small ones. But I actually contacted him and I was like, "Hey, thanks for putting me on your playlist because I'm getting a ton of listeners from that," and he was like, "Yeah, well I just went through and listened to the artists when they announced the lineup, I put on the ones that I liked so that I could learn their best songs so that when I showed up to their show I would know some of the songs even if they weren't artists I knew." You know what I mean?

Corrin:

So he was sharing that playlist, and people, when you search for Warped Tour '16, it actually pops up higher than the Official Vans Warped Tour '16 lineup playlist. I think it's probably because he kind of lumped these ... he just put rock artists on it, and it didn't have this mix. So there was one spike I had on Discover Weekly that was that, and then another was a playlist that Tyler Smith of Dangerkids had, and it was all very, very similar to my genre of music.

Circa:

Right.

Corrin:

That was another spike, because he's got a big following, just as a person. Dangerkids plays in like Journeys' stores, you know?

Circa:

Right.

Corrin:

So everyone that he put on there was kind of a fit for that, and that was another spike, and it stayed on Discover Weekly for like five months or something. It slowly tapered off, you know? But there was a point where my monthly listeners was in the 80k range, and I was like, "Why is this happening?" Because these are both small playlists that have a few thousand followers, nothing huge, but somehow that's just what it turned out to. So now I kind of understand how that happened.

Circa:

There's just so much that goes into it that it's hard to really pin down one thing, and it's sort of, you have to develop like a sort of system-wide blanket approach, because if you get specific with every method, there's a lot that you could optimize in the wrong way. And it's still settling, so who knows where it'll be in five years.

Corrin:

Right.

Circa:

But yeah, it's just crazy to know not only just what playlists you end up in matter a lot, in terms of being ... insofar as your goal is to get recommended a lot in Spotify. But also, it's entirely possible that this type of math is being factored into the search results of Spotify.

Corrin:

Right.

Circa:

So if your playlist shows up for a certain artist when it's searched, it could be the popularity of your playlist, plus the instance of that artist's name in your playlist, including the description.

Corrin:

Right. It just means that your music needs to be good. You know what I mean?

Circa:

Yeah.

Corrin:

Yeah, I mean, it's not just about sending people to your stuff, and I really dig that actually, because I've always thought I don't like the hunting down curators thing, which I know it can work if done correctly, and I also don't like nagging people to pre-save stuff or whatever. The fact that so many factors are going into this, you should still make those efforts to promote your music, obviously, but the fact that there's so many other things integrated into it really means that all of these beat the algorithm or game the algorithm kind of strategies are, just like in Facebook and in Instagram, this is not going to work. I think it puts a lot of pressure on ethical white hat stuff, which is what we want. That's the Indepreneur way.

Circa:

That was sort of a big realization for me, is we have always said, "Don't be in listen pods and engagement pods, and don't spam people with your link to Spotify, you could get all types of wrong people there, and don't get on sketchy playlists where you're going to run up your listenership with people who aren't real or who listen to a bunch of weird shit." And it's all here. Insofar as you are using tactics that involve your song being streamed by people who not only don't actually like your song, but who themselves listen to a bunch of stuff that isn't your type of music, the recommendation engine thinks that you're in the completely wrong space.

Corrin:

Right.

Circa:

And everyone they recommend you to hates it.

Corrin:

Yeah.

Circa:

So they're constantly being told, "Hey, this artist shouldn't be recommended, and we actually don't know where they should be in the vector space." They're like unrecommendable. There you go.

Corrin:

Right. Then they like search for your artistry online and there's nothing, you know?

Circa:

Yeah.

Corrin:

There's no documentation, or there's no blogs, or there's no whatever, and it's like, "Oh yeah, well this is just something that we don't need to show anybody."

Circa:

There's nothing we can do.

Corrin:

Yeah, exactly.

Circa:

Yeah, and that's a big problem, is like if we don't know where this is supposed to go, and every time we try to recommend it to get some more streaming data on it, it's a negative experience for the user, there's nothing we can do. Hands are tied.

Corrin:

Yeah. It's crazy.

Circa:

Another issue that they had, which is why they have a third recommendation model, is something they call the cold start problem, which is everything that we do is based on songs getting written about, songs getting included in playlists, and songs being streamed by our users. If none of those three things have happened yet, we have what is called the cold start problem. There's no streaming data. Like a track's brand new, this is a classic cold start issue, right?

Corrin:

Right.

Circa:

There is no streaming data, the track's brand new, it hasn't been written about, it's not in any playlists, there's no metadata out there on Last FM or where have you, wherever they're checking. So for a while, Spotify simply could not recommend those songs safely. They just couldn't do it. They could maybe use info about your artist ID to make a guess as to what type of music this was.
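
As a rough illustration of the cold start check described here, the sketch below treats a track as "cold" when none of the three data sources has anything on it yet. The class, field names, and model labels are hypothetical, chosen only for this example; nothing here reflects Spotify's actual code.

```python
from dataclasses import dataclass


@dataclass
class TrackSignals:
    """Hypothetical summary of what is known about a track so far."""
    stream_count: int = 0    # streams by users
    playlist_count: int = 0  # playlists that include the track
    writeup_count: int = 0   # blog posts or articles that mention it


def is_cold_start(signals: TrackSignals) -> bool:
    """A track is 'cold' when all three data sources are still empty."""
    return (
        signals.stream_count == 0
        and signals.playlist_count == 0
        and signals.writeup_count == 0
    )


def pick_model(signals: TrackSignals) -> str:
    """Fall back to audio analysis only when nothing else is available."""
    return "audio_analysis" if is_cold_start(signals) else "behavior_and_text"


brand_new = TrackSignals()
established = TrackSignals(stream_count=5000, playlist_count=12)
print(pick_model(brand_new), pick_model(established))
```

The point of the sketch is only the fallback logic: the audio-based model matters most when there is no other data to lean on.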

Circa:

So anyways, a summer intern that was at Spotify built an audio analysis engine, and that audio analysis engine basically takes a look at the spectrogram, yeah, spectrogram of the song, which is like the audio data laid out visually.

Corrin:

Right.

Circa:

It then analyzes that spectrogram using a certain machine learning model where it takes a look at sections of the spectrogram piece by piece, and then runs its own crazy calculation on all that data to figure out, in a very backwards, non-intuitive way, "What is the key of this song? What's the tempo? How many beats? What's the pitch, the timbre, the chord progression, the melody? Maybe we can make some guesses as to the genre."

Corrin:

Wow.

Circa:

"The aggressiveness, the loudness." They can find all of that out based on this audio profile.

Corrin:

Right.

Circa:

Then they use that as data fed into the latent vector space. Every song in Spotify has this data, has something attached to the song ID that basically places this song better in latent vector space.
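
To picture what "placing a song in latent vector space" could mean, here is a minimal sketch that represents each track as a short list of numbers and finds the closest existing tracks by cosine similarity. The three features, the track names, and every value are made up for illustration, and cosine similarity is an assumed choice of distance; the real model's dimensions and metric are not described in this episode.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_tracks(new_vector, catalog, k=2):
    """Return the ids of the k catalog tracks most similar to new_vector."""
    ranked = sorted(
        catalog.items(),
        key=lambda item: cosine_similarity(new_vector, item[1]),
        reverse=True,
    )
    return [track_id for track_id, _ in ranked[:k]]


# Made-up vectors: (tempo, loudness, aggressiveness), each scaled to 0..1.
catalog = {
    "mellow_folk": [0.3, 0.2, 0.1],
    "fast_punk": [0.9, 0.8, 0.9],
    "hard_rock": [0.7, 0.9, 0.8],
}
new_track = [0.8, 0.85, 0.85]  # vector derived from audio analysis alone
print(nearest_tracks(new_track, catalog))
```

A brand new track with no streams can still land near similar-sounding songs this way, which is what makes first-week recommendations possible.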

Corrin:

I love how that was an intern who did that, you know what I mean?

Circa:

Yeah, that was my favorite part.

Corrin:

Yeah.

Circa:

Yeah, we had an intern build us this thing, and we use it.

Corrin:

And now it's implemented in all of our systems. I want an intern like that.

Circa:

Yeah, the machine learning developer world is nuts, but I'm sure it was a well-paid intern.

Corrin:

Of course, yeah.

Circa:

Or I hope. But yeah, so the only way that this actually happens, though ... Well, okay, so it can happen for tracks that you just release, but your song is not going to get on Release Radar in the first week because they don't know who to recommend it to. But after a little while, it will. But for a while now, they've been allowing you to "submit a song" for editorial playlist consideration.

Corrin:

Right.

Circa:

What I didn't know going into this is that that also, it allows them the seven days that they need. If you do it seven days early, it gives them the time they need to analyze your song's spectrogram, and then place it in the latest version of the model, of the engine.

Corrin:

Ah.

Circa:

Then they automatically include you in Release Radar in the first week based off of the audio data of your song. That's the only thing they're using to make those first week recommendations in Release Radar.

Corrin:

Oh. That's funny because when I came out with Charlatan, I put it in, I obviously submitted it, and it was like, "What genre?" And I'm like, "Well, it's a rock song that features a rapper." Then I started thinking, "What did they think that Linkin Park should be?" Danger Kids is the same kind of sound. They have rock mixed with rap and stuff. I'm like, "So what do you do when it doesn't fall ... " And I'm sure lots of Indies are like, "I'm not any of these genres." Right? Because everybody does. "I'm not like everybody else."

Corrin:

So it's interesting now, and it's good to know that there's more evaluation going on than somebody behind ... because I thought, I was like, "How in the world could they possibly listen through all these tracks and identify all these things about it?" I was like, "There's no way. There's thousands or millions or whatever it is, all these tracks being submitted every single day. How in the world are they even managing that?" So I really disregarded that process as something that was, "Nah, that's not going to do anything." And the fact that they've got a machine learning and evaluating, I mean, it's at least something, right? There's probably going to be a lot of artists who are like, "Well, I don't-"

Circa:

It's huge. We just did it for Dukes, and he got 1,000 first week Release Radar listeners.

Corrin:

Nice.

Circa:

It's crazy.

Corrin:

Nice, yeah, see, that's the kind of stuff that a lot of artists are probably like, "No, I don't want a machine listening to my stuff," or whatever, or, "A machine is not going to be able to evaluate my stuff properly." But it's like at least something is happening, right? And it's not like, "Oh, you're not in a label, so screw you." You know? There's some intent behind that whole process, and that's a relief.

Circa:

Oh totally. They only built it to benefit people who would never be able to start up their streaming data otherwise.

Corrin:

Right, right.

Circa:

So it's purely for artists starting out, if you think about it.

Corrin:

Yeah, that's crazy. That's great. I love that.

Circa:

Yeah. That's not the only place that they make it fair. In the recommendations they make for your homepage, they include randomness. They might randomly recommend you an album from an artist who's not so popular, or just random. The most recent one was a Spotify developer named Oscar Stahl, I believe, I'll link to it in the show notes. But he talks about how they were building the most recent iteration of the homepage recommendation, which was previously the Discover tab, but now it's right there on your homepage. Every slot that's in those rows on your homepage has a chance of being just a random slot to allow for fairness.
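
The "every slot has a chance of being random" idea can be sketched as a simple explore-or-exploit loop. The function name, the 25% exploration probability, and the item names below are all assumptions made for this example; the episode does not say what probability or selection rule Spotify actually uses.

```python
import random


def fill_row(ranked_items, exploration_pool, slots, explore_prob, rng):
    """Fill a homepage row, giving each slot a chance to be a random pick."""
    if len(ranked_items) < slots:
        raise ValueError("need at least `slots` ranked items")
    row = []
    ranked = iter(ranked_items)
    for _ in range(slots):
        if exploration_pool and rng.random() < explore_prob:
            # Random slot: surface something the ranking would not have chosen.
            row.append(rng.choice(exploration_pool))
        else:
            # Normal slot: take the next best personalized recommendation.
            row.append(next(ranked))
    return row


rng = random.Random(7)  # seeded only so the example is repeatable
row = fill_row(
    ranked_items=["top_pick_1", "top_pick_2", "top_pick_3", "top_pick_4"],
    exploration_pool=["lesser_known_album_a", "lesser_known_album_b"],
    slots=4,
    explore_prob=0.25,
    rng=rng,
)
print(row)
```

With the probability set to zero the row is purely the personalized ranking; raising it trades some predicted satisfaction for exposure to less popular artists.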

Circa:

Then also, they've started to do this thing that Oscar talks about where they optimize for two different variables. So instead of like you might optimize your Facebook campaign to get a lowest cost per click, or lowest cost per video viewer, they're optimizing their recommendation engine to maximize user satisfaction and equity, like fairness to less popular artists, at the same time.

Circa:

So it's very difficult mathematically to optimize for two factors, but they're doing it.
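
One common way to optimize for two factors at once is to blend them into a single score with a weight. The sketch below uses that weighted-sum approach purely as an illustration; whether Spotify does it this way is not stated in the episode, and the scores, names, and the 0.3 weight are invented for the example.

```python
def combined_score(satisfaction, fairness, fairness_weight=0.3):
    """Blend predicted user satisfaction with a fairness score (both 0..1)."""
    return (1 - fairness_weight) * satisfaction + fairness_weight * fairness


def rank(candidates, fairness_weight):
    """Sort candidates from best to worst under the blended score."""
    return sorted(
        candidates,
        key=lambda c: combined_score(
            c["satisfaction"], c["fairness"], fairness_weight
        ),
        reverse=True,
    )


# Made-up candidates: the popular album is a slightly safer bet for the
# listener, the emerging one does far more for exposure of smaller artists.
candidates = [
    {"name": "popular_album", "satisfaction": 0.80, "fairness": 0.10},
    {"name": "emerging_album", "satisfaction": 0.70, "fairness": 0.90},
]

print([c["name"] for c in rank(candidates, fairness_weight=0.0)])
print([c["name"] for c in rank(candidates, fairness_weight=0.3)])
```

The difficulty Circa mentions shows up in choosing the weight: too low and small artists never surface, too high and listeners start getting recommendations they do not enjoy.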

Corrin:

Yeah, that's nuts. It's funny, too, because I always like ... There's certain things ... This is very separate from the business operations of Spotify, you know? And a lot of-

Circa:

Oh, way separate.

Corrin:

Yeah, like the way that ... And I've heard that, too, from people who work at Spotify, is like, "Okay, these segments of the genius of Spotify and the marketing of Spotify is very, very different." Spotify, their royalty payouts, and their complaints about being on the App Store, and all of these things where Spotify and Apple are fighting, it's always like this PR, or this negative press about Spotify, which is always why I've just been like, "I'm down on Spotify."

Corrin:

But when they say, "Oh yeah, well we try to promote ... This is a better opportunity for Indie artists than anywhere else," I've always been like, "That's baloney because there's a million reasons why I didn't believe them." But when you actually go over to the developer's side, you're like, "Okay, so maybe the business side of Spotify is pushing label and pop artists more or whatever because these labels own a big portion of Spotify, but at the same time, there are certain pieces in the algorithm that have to look at these things, and can't just ignore."

Corrin:

I mean, I guess it's possible that bigger artists are given more weight, somehow, in this algorithm, but I think that would be another variable that would be really difficult to work into all of the things they're already considering, from what you've explained. So now I'm like, "Well, maybe I need to learn more about this." You know, change my position a little.

Circa:

Well, I mean, at the end of the day, it's a miracle what they've done, but it's not good enough.

Corrin:

Right, right.

Circa:

It's not the final solution to the problem of paying artists appropriately for their artwork, streaming.

Corrin:

Right, that's a whole other thing.

Circa:

The current iteration of streaming is not it.

Corrin:

Right, right.

Circa:

But it's encouraging that they have such talented people who are earnestly thinking about these challenges. It's so cool that they take so much time to build in equity for unsigned and undiscovered artists. They spend a lot of time on it. They build their products around it. So that's all encouraging.

Circa:

Aside, we know a lot of shady shit about the business of Spotify.

Corrin:

Right.

Circa:

Including their move to podcasts, and the real reason for it. But the developer side is actually really cool, and what they've done is massive. So ultimately, the overarching strategy for any Indie on Spotify is generate streaming data, which is like you need to generate listens, and streams, and followers who are the right people. Right? That's most important. They have to be people who actually, through no coercion, love your music.

Corrin:

Yeah.

Circa:

That's so important, otherwise you're never going to get recommended to the right people.

Circa:

And we haven't even talked about this, so let's just talk about this. Spotify's ... we talked about exactly how Spotify gets to the point where they're ready to recommend to you, but what happens then?

Corrin:

Right.

Circa:

That's what really matters. Say you're in Discover Weekly for a batch of 100 users. Liking a track is an unambiguous positive signal: if it happens, it's clearly a positive. Adding a track to your playlist is also an unambiguous positive signal. Skipping a track is an ambiguous negative signal, so if someone skips your track, they don't know for sure that it's bad. So if they don't get those unambiguous positive signals from the first batch of users, you're going to die on Discover Weekly.
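
Here is a minimal sketch of how a first test batch might be scored from those signals. The numeric weights, the threshold, and the function names are assumptions made for illustration; the episode only says that likes and playlist adds are unambiguous positives and that skips are an ambiguous negative.

```python
# Hypothetical weights: strong for unambiguous positives, weak for skips
# because a skip does not prove the listener disliked the track.
SIGNAL_WEIGHTS = {
    "like": 1.0,
    "add_to_playlist": 1.0,
    "skip": -0.25,
    "passive_listen": 0.0,
}


def batch_score(events):
    """Average signal weight across the events from a test batch."""
    if not events:
        return 0.0
    return sum(SIGNAL_WEIGHTS.get(event, 0.0) for event in events) / len(events)


def should_expand(events, threshold=0.2):
    """Widen distribution only if the batch responded clearly positively."""
    return batch_score(events) > threshold


warm_batch = ["like", "add_to_playlist", "skip", "skip"]
cold_batch = ["skip", "skip", "skip", "passive_listen"]
print(should_expand(warm_batch), should_expand(cold_batch))
```

Under these made-up weights, a batch that only listens passively scores zero, which matches the idea that no clear signal means no wider push.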

Corrin:

Right. That's so funny because when I listen to Discover Weekly and I hear a song that I don't like, I skip it thinking, and I rush to skip it before it hits 30 seconds, not because I don't want that artist to get paid, but because I always just assumed because that's the marker they use for payment, that that was a significant place where I need to skip it before then so that they don't recommend things like that to me anymore. Now, I realize that that might not be a thing.

Corrin:

The other thing is I never like tracks. I have a playlist that, if you want to see how stupid and weird my tastes are, I have a playlist called Stuff I Like Lately, and there's some old stuff on there that I've liked for a long time. And every kind of thing is on ... I've got Katy Perry and Ariana Grande on there, but I've also got like Asking Alexandria and Zedd and Bassnectar and whatever. Right? That's the stuff I listen to, and I don't generally listen to mixed stuff. But every once in a while, I'm like, "Okay, this playlist is done."

Corrin:

So I'll listen to Discover Weekly for the day, and I won't like songs, but I'll drag them over to the Stuff I Like Lately playlist, which I listen to on repeat. I have that one, and then I have a playlist called Music for Badass Tech and Coding, which is mostly just like electronic and drum and bass stuff that doesn't have a lot of words in it so that I can focus on the thing that I'm doing, so I don't have words, and I'm not analyzing a song for a hook or something.

Corrin:

Those are the two playlists that I have, and that's usually how I indicate to Spotify that I like something, instead of the like button. So is that in an unambiguous category as well?

Circa:

If your behavior is ... from what I can tell, especially from Oscar's presentation, is that Spotify is looking at different types of listeners, and it's categorizing you. So if your behavior is consistent, then you're contributing accurate positive and negative signals. So Spotify might learn about your user ID that you are someone who automatically will skip anything they don't like, 100%.

Corrin:

Okay, okay.

Circa:

Then you're good. But if you put on songs that, if you listen to a lot of playlists that have a lot of just like leave it on and you're not near the device and you don't skip those, then Spotify can't necessarily say for sure that you not skipping a song is a positive signal.

Corrin:

Right.

Circa:

That make sense?

Corrin:

Yeah, totally makes sense.

Circa:

So it trains on your behavior.

Corrin:

Yeah. I listen to the New Alt, that's another one that I really like, if you like alternative rock, or even alternative pop. That's a good one. And it doesn't pop up. I'll listen to it, and I'll drag stuff over to my playlist from there, and lately, it's shown me stuff that I really do like, whereas before my Discover Weekly was off.

Corrin:

So yeah, I guess, so you're saying that taking any action of a deliberate kind, aside from skipping, it could communicate something, whereas passive listening is not as much of an indicator, which means if you're on a playlist that's ambient, it's not necessarily that it's bad, but it's also not necessarily giving Spotify a lot of signals if it's just like a playlist where no one's really taking any kind of action.

Circa:

Right. Well, yeah, so there's a lot of different ways that Spotify can calculate positive and negative feedback, but it's not a stupid system. Right?

Corrin:

Right.

Circa:

It doesn't just blanket-

Corrin:

It's not just pre-saves, which is what they're always telling everybody to worry about the most.

Circa:

Get those pre-saves. Another thing is, think about it like this. When you pre-save, as far as I understand it, the technology you're using to get pre-saves stores the user ID and the permission to add the track to their playlist, and like it for them, when it's released.

Corrin:

Right, right.

Circa:

So it's not actually doing it when they "pre-save" it, it does it literally at the moment it comes out, right?

Corrin:

Mm-hmm (affirmative), yeah.

Circa:

So what you have is a flood of ... Let's think about it like this. The best quality data you could have for Spotify to train on is data of streams from the exact people who actually love your music, and no one else, specifically they love the song that they streamed.

Corrin:

Right.

Circa:

That's the best quality data you can get.

Corrin:

Yeah.

Circa:

And it gets more fuzzy out from there. One particularly fuzzy set of data is a bunch of saves from people who have never even listened to the song yet.

Corrin:

Yeah, and especially if they don't listen to it.

Circa:

How is a machine learning model ...

Corrin:

Yeah, if they don't listen to it later that week after it's been saved, then there's really ... why would that be valuable?

Circa:

I love Reel Big Fish, right?

Corrin:

Yeah.

Circa:

But there's tons of their catalog that I don't like, and if you recommend me a bunch of songs that are like those songs, I won't like it.

Corrin:

Right.

Circa:

But if Reel Big Fish sticks their ad in front of me and says, "Hey, pre-save this track. It'd help us out a lot," I would do it. But that's not giving good data.

Corrin:

Gotcha.

Circa:

Anyways, they don't specifically say that they root out pre-saves from the machine learning model, and the business side of Spotify is always saying to get your pre-saves, but as far as I understand it, this machine learning model is constantly learning, and it's not so obvious how it's learning. So it might just start to root out things without being told to by developers, because it's a neural network. If it's good enough, it should root out pre-saves because it's fuzzy data. It will eventually root them out or weight them differently.

Corrin:

Yeah.

Circa:

So a save without a listen is not necessarily a great thing, in my opinion.

Corrin:

Yeah. And it's possible, too, we're saying that the development section of Spotify is so different from the business section, it's possible that the business section isn't totally listening to developers, or that if they are like, "Hey, send people to pre-save their stuff," in a lot of cases, that's sending people to Spotify, right? So their monthly activity, their active users goes up, even if that person isn't necessarily, if they're more like I used to be, where they're more of an Apple Music user than Spotify, but they love the artist so they're going to try to help them out to pre-save. So it could be the business side trying to get their monthly active users up, try to get people back into the infrastructure so that they can sell them on a paid membership. You know what I mean? There's so many business things that that could be motivating that have nothing to do with the algorithm.

Corrin:

I'm not saying that's how it is, but I could see how that could be true, that pushing pre-saves from the business side, or the marketing side, or the PR side of Spotify may or may not be connected to that actually being valuable in the algorithm.

Circa:

Yeah. I mean, when you start down that line of thinking we just started down, it's easy to see how saves might not be so tight.

Corrin:

Right.

Circa:

Because they're very ... because they're something that artists seek to game, they may not be so unambiguous.

Corrin:

Or even if they are unambiguous, maybe they have a much lower weight when it's considering all these variables. It might be something that just doesn't weigh as heavy as other actions.

Circa:

Yeah. What's clear to me is that the only way for something to really take off from a recommendation standpoint is, one, you have a hit, like a classic music industry hit, right?

Corrin:

Yeah.

Circa:

And everyone's listening to it. That's great, sure, it's going to take off because it's just going to have such a high popularity ranking out the gate. It's going to be related to songs that are just popular, not necessarily by genre, but just because of their obscene popularity. So that's one way, but the second way is to, at the moment that you become eligible for wide distribution on Discover Weekly and Release Radar, the data that feeds those recommendation models is so fucking accurate that literally everyone it recommends to is like, "Fuck yeah, dude," streams it like 30 times immediately. That will immediately tell Spotify, "You found the money. Give this the widest distribution."

Circa:

So to me, it's like, "We should be pre-qualifying people." Instead of trying to get people to go to Spotify, you should be stopping people from going to Spotify unless they really like what they're about to get sent to.

Corrin:

Yeah. That's crazy because we talk about that in other things in marketing, right? Like excluding the wrong people, having the right message for the right people. And no offense to anybody, but I exclude Indepreneur engagement from my music promotion, not because I hate you guys, but because I don't want to have a bunch of-

Circa:

It's fuzzy data.

Corrin:

Yeah, exactly, it's fuzzy. Even though some of you might like my music, hopefully all of you, but it's funny that that is completely supporting the same thing that we say about so many other elements of marketing, you know? It's like get the right people or else this action or this activity is now not worth all the effort you put into it.

Circa:

Yeah. Okay, so that's ... I just described this really advantageous way that you could end up on Discover Weekly, where all your data is right, it's exactly right. Every person who's ever streamed your track absolutely loves it, they weren't doing you any favors.

Corrin:

Right.

Circa:

Okay, now think about it like this. The pool that you're in matters. So if all that's true but you're doing, let's say like the intersection of Celtic folk music and ska, right? You have a pretty niched down audience.

Corrin:

Right, right.

Circa:

We were at CD Baby recently, and I believe there was, at one talk, someone said, "Can you niche down too much? Yes."

Corrin:

Yeah.

Circa:

You 100% can niche down too much, yes. So you don't want to be in the smallest pool. You want, for instance, let's say that only half of a given sub-genre is going to ever like your music a lot, enough so that when they're recommended it they love it. Would you rather that sub-genre have 100,000 people, or 2,000 people? Because you want to be swimming in a pool that's relatively large enough. Not so large that there's a high chance that people won't like this specific song, but large enough that there will be a lot of right people in it. And if you're niching down and excluding elements of your artistry to fit a smaller niche, don't do that.
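
The pool-size comparison works out as simple arithmetic, using the numbers from the example above (half of a sub-genre loving the music, in a pool of 100,000 versus 2,000). The function name is just for illustration.

```python
def expected_fans(pool_size, share_who_love_it):
    """Number of listeners in a pool who would love the music."""
    return int(pool_size * share_who_love_it)


large_pool = expected_fans(100_000, 0.5)
small_pool = expected_fans(2_000, 0.5)
print(large_pool, small_pool, large_pool // small_pool)
```

Same hit rate, but the larger pool leaves fifty times as many right listeners for the recommendation engine to find.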

Corrin:

Yeah. Well, okay, so take that example, like Celtic music, but with a ska edge, right? People who like Celtic music would not necessarily like your stuff. In fact, most of them probably wouldn't.

Circa:

Right.

Corrin:

And then some ska people would like your stuff, but a lot of them wouldn't.

Circa:

Not all.

Corrin:

Right, and so it's funny because that's what we talk about with ads, too, is you want a broader audience so that you're giving Facebook more data to find the right people. If you make it too small, then Facebook is limited to only the people that you're allowing them to survey or analyze. In Spotify, it's the same thing. If you have more people, it's getting more meaningful data because it's able to find the right people. Yeah, that's great.

Circa:

Yeah. You can be the world's foremost expert on putting on your clothes quickly. That's pretty niche. Or you can be the world's foremost expert on putting on your underwear quickly. That's too niche. You know?

Corrin:

Nobody wants that. Nobody wants it.

Circa:

Yeah, it's not enough things. You can't build a career off that.

Corrin:

Right. You wouldn't want to put on your underwear really quickly to get out the door unless you've got some big personal life problems, but for the most part-

Circa:

Yeah, exactly.

Corrin:

... you want to put on all your clothes first.

Circa:

Yeah. I mean, if your maximum possible safe distribution for Discover Weekly is like 2,000 people, it's a definite ceiling, and once it hits that ceiling, Spotify doesn't know necessarily where to put you.

Corrin:

Right, right.

Circa:

So don't be like acid jazz hiphop funk punk.

Corrin:

Right.

Circa:

It's okay to be acid jazz.

Corrin:

I mean, do, and let me listen to it, but beyond that, because I want to know what that sounds like.

Circa:

Oh yeah, and all I mean is don't niche down any further than is your artistic soul.

Corrin:

Right.

Circa:

If your artistic soul is that niched down, dude, that's your circumstance, you have to deal with it, that's your truth. But don't niche down because some marketer told you to do it more. Don't do that.

Corrin:

Agree. Agree, totally.

Circa:

And there's a very clear recommendation based reason why you shouldn't. But yeah, ultimately it's like feed Spotify clean data. Right now, for the last four months, or five months, I've been running consistent traffic on a single song that's not on Spotify yet. It's releasing this Monday. I submitted it for the Release Radar audio analysis. I used my fan finder data, so I looked at my fan finders that have been running, and said, "What's the best audience, like the artists that their fans all love this song?" And then I went into Spotify's developer API, which you can go to developer.spotify.com, and you can use it, like as a toy. I just looked up those artists, and found out what Spotify thinks their genre is, and then when I submitted my song to Spotify for playlist inclusion, I used those genres.
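
For anyone who wants to try the artist genre lookup described here, the sketch below shows one way it could be done against the Spotify Web API's search endpoint. It assumes you already have an access token from the developer dashboard, and that the search response lists genres under `artists.items[].genres`; the helper names are hypothetical, and the API may change, so treat this as a starting point and check the current documentation.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.spotify.com/v1"


def build_artist_search_url(artist_name):
    """Build a search URL that asks for the single best-matching artist."""
    query = urllib.parse.urlencode(
        {"q": artist_name, "type": "artist", "limit": 1}
    )
    return f"{API_BASE}/search?{query}"


def extract_genres(search_response):
    """Pull the genre list for the first artist out of a search response."""
    items = search_response.get("artists", {}).get("items", [])
    return items[0].get("genres", []) if items else []


def fetch_artist_genres(artist_name, access_token):
    """Call the API (needs network access and a valid token)."""
    request = urllib.request.Request(
        build_artist_search_url(artist_name),
        headers={"Authorization": f"Bearer {access_token}"},
    )
    with urllib.request.urlopen(request) as response:
        return extract_genres(json.load(response))


# Offline illustration with a hand-written, made-up response shape.
sample_response = {
    "artists": {"items": [{"name": "Example Artist", "genres": ["ska punk"]}]}
}
print(build_artist_search_url("Reel Big Fish"))
print(extract_genres(sample_response))
```

Running `fetch_artist_genres` for each artist your fans already love gives you a list of genre labels to compare against the options on the submission form.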

Corrin:

Nice.

Circa:

Then, yeah, so now I've got about, I've got over 1,000 or 2,000 people who all have been asking for the Spotify song.

Corrin:

Wow.

Circa:

They've seen the video, they love the video, they want to hear the song. The only marketing I'm going to do is talking to those people, because I know they already love the song, they're going to go into Spotify and stream it a bunch. And they're the exact right people for it. Facebook already did all that pre-selection for me. So I'm not going to run ads to a cold audience to go to this song, because I'm really trying to feed the right data in.

Circa:

I think that's the best approach here, is feed the right data in, and once you get to a certain size, you can start worrying about things like playlist placement, blog PR. But start with your own audience that you're building, and then spin it out to, I would go with playlist placement first, just because it's going to have more of a "return" than blog PR at first.

Corrin:

Right, right.

Circa:

And expect this is a two to three year investment that you make before I see people get successful with it.

Corrin:

Right.

Circa:

So definitely, be in it for the long haul. I know that I'm probably not going to make back the money that I've spent getting people to this single I'm about to release. I mean, I might, but probably not, but that's okay. I'm just trying to build up my audience right now, and feed the right data to Spotify so that when I hit an inflection point, I'm not being recommended to tons of Filipino people who just listen to whatever they're paid to listen to.

Corrin:

Right.

Circa:

Does that make sense?

Corrin:

Yeah.

Circa:

Like Filipino click farm workers who are just paid to listen to a whole smattering of unsigned artists because that's who's buying these fake streams, or who's advertising to these playlists. You know?

Corrin:

Yeah.

Circa:

So you want it to be all the right people so that when it gets recommended, it's going to be to people who show Spotify they love it.

Corrin:

Right. That's why you're saying this is a long tail thing, especially if you're spending any money advertising, especially if you're doing it to a cold audience. It's going to be really difficult to screen out the right type of unambiguous action, the people who will take these unambiguous actions.

Circa:

Yeah, definitely. Yeah, yeah, and what matters more, like if you have a strategy that will get you 1,000 right listeners, or 10,000 fuzzy listeners, in terms of data, go with the 1,000 right listeners.

Corrin:

Right, right.

Circa:

Build a sturdy structure because when it pops off, it will fucking explode. Like I'm watching someone who's done some very good marketing for their Spotify right now, I'm looking at their growth curve from the Since 2015 data, and it's a straight line up now. You know?

Corrin:

Right. Yeah, totally. That's crazy.

Circa:

So yeah, that's the Spotify recommendation engine. I hope that made sense to everyone.

Corrin:

I think we drilled it down. Because I'm not that into this either, you know? I'm not like you, who's mapping out a matrix on my whiteboard, you know what I mean? Or whatever it is you're doing with it. So yeah, I think we drilled it down to some layman's, some smart musician terms.

Circa:

Yeah, and now if someone tells you this or that about the recommendation engine, you can be like, "Actually, it's this."

Corrin:

Actually ...

Circa:

Yeah. Actually, this is how it works. So yeah, I hope that was helpful for everyone, and I hope it informs your long-term strategy as to promoting your growth on Spotify. Yeah, next week we'll be in here to talk about ads, direct to Spotify ads, and how you might use them. Hopefully this has been a good primer for that, hopefully we're in a good place to do that.

Corrin:

Yeah.

Circa:

Rock and roll. Well yeah, if you guys want to check out this Spotify training, it will be available in about three weeks standalone, but you can also just go to Indepreneur.io/episode103, and I'll leave a link to a trial for IndiePRO if you're not an IndiePRO member yet, and you can take it now for $1.00. We'll see you guys next week on Creative Juice. Peace out.

Circa:

(singing)